Rows: 455

Columns: 117

$ id <chr> "614ea93d581d6f4281e9d232", "5dd5378596afdf4eb31…

$ happiness_d <int> 2, 4, 4, 2, 2, 1, 2, 2, 1, 3, 2, 4, 1, 1, 2, 1, …

$ sadness_d <int> 7, 6, 4, 4, 6, 7, 6, 1, 4, 5, 4, 5, 4, 6, 7, 1, …

$ fear_d <int> 5, 5, 1, 4, 1, 7, 5, 4, 6, 2, 5, 6, 4, 5, 3, 5, …

$ anger_d <int> 5, 5, 1, 3, 5, 7, 1, 1, 2, 1, 5, 1, 1, 5, 5, 6, …

$ surprise_d <int> 4, 1, 1, 2, 4, 7, 2, 1, 1, 1, 1, 2, 1, 6, 2, 2, …

$ disgust_d <int> 3, 1, 1, 2, 4, 7, 1, 1, 3, 1, 5, 1, 1, 7, 1, 6, …

$ age_d <int> 38, 44, 37, 25, 33, 52, 48, 40, 36, 56, 36, 41, …

$ gender_d <chr> "Female", "Female", "Make", "Male", "female", "F…

$ edu_d <int> 3, 5, 3, 5, 4, 3, 2, 5, 3, 2, 3, 5, 5, 5, 2, 3, …

$ race_d <chr> "3", "3", NA, "2", "3", "3", "3", "3", "3", "3",…

$ race_d_5_TEXT <chr> "", "", "", "", "", "", "", "", "", "", "", "", …

$ employment_d <int> 9, 12, 9, 9, 9, 14, 9, 9, 16, 9, 9, 9, 9, 10, 13…

$ political_d <int> 2, 1, 3, 4, 6, 2, 6, 6, 2, 1, 5, 3, 5, 3, 5, 2, …

$ class_d <int> 2, 2, 2, 3, 2, 1, 3, 3, 2, 3, 1, 2, 2, 2, 1, 2, …

$ ladder_d <int> 4, 4, 5, 6, 4, 2, 5, 5, 4, 6, 1, 4, 4, 3, 5, 4, …

$ AI.response <chr> "That sounds like a very difficult situation to …

$ happiness_ai <int> 1, 2, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 1, 1, 2, 1, …

$ sadness_ai <int> 6, 6, 4, 5, 7, 7, 6, 5, 5, 6, 4, 5, 7, 6, 5, 3, …

$ fear_ai <int> 5, 7, 3, 6, 5, 7, 6, 6, 4, 5, 5, 6, 6, 5, 3, 4, …

$ anger_ai <int> 4, 5, 3, 4, 6, 7, 2, 4, 2, 3, 3, 3, 4, 4, 4, 5, …

$ surprise_ai <int> 2, 4, 2, 3, 2, 6, 3, 2, 1, 2, 2, 1, 2, 2, 1, 2, …

$ disgust_ai <int> 3, 2, 2, 2, 4, 7, 1, 3, 1, 2, 2, 2, 3, 3, 2, 4, …

$ Human.response <chr> NA, NA, NA, NA, NA, NA, NA, "I am sorry for the …

$ happiness_r <int> NA, NA, NA, NA, NA, NA, NA, 3, NA, 3, 2, 4, NA, …

$ sadness_r <int> NA, NA, NA, NA, NA, NA, NA, 4, NA, 4, 5, 5, NA, …

$ fear_r <int> NA, NA, NA, NA, NA, NA, NA, 6, NA, 5, 7, 6, NA, …

$ anger_r <int> NA, NA, NA, NA, NA, NA, NA, 1, NA, 2, 4, 2, NA, …

$ surprise_r <int> NA, NA, NA, NA, NA, NA, NA, 1, NA, 1, 3, 1, NA, …

$ disgust_r <int> NA, NA, NA, NA, NA, NA, NA, 1, NA, 1, 4, 1, NA, …

$ understood <int> 6, 7, 5, 6, 7, 7, 6, 7, 6, 7, 3, 5, 3, 7, 6, 7, …

$ validated <int> 5, 7, 4, 6, 7, 7, 4, 7, 6, 7, 5, 5, 3, 6, 5, 7, …

$ affirmed <int> 5, 7, 4, 5, 7, 7, 4, 7, 6, 7, 5, 5, 3, 7, 6, 7, …

$ seen <int> 6, 7, 3, 6, 7, 7, 6, 6, 6, 7, 3, 6, 3, 6, 6, 7, …

$ accepted <int> 6, 7, 3, 6, 7, 7, 6, 6, 6, 7, 4, 4, 3, 6, 5, 4, …

$ caredfor <int> 6, 7, 4, 5, 7, 7, 7, 6, 6, 7, 5, 3, 3, 6, 6, 4, …

$ accuracy1 <int> 6, 7, 4, 5, 7, 4, 5, 6, 6, 7, 3, 5, 2, 7, 6, 7, …

$ accuracy2 <int> 6, 7, 5, 5, 7, 4, 7, 7, 6, 7, 2, 5, 2, 7, 6, 7, …

$ knewmean_p <int> NA, NA, NA, 6, 7, 7, 5, 7, NA, NA, 2, NA, 2, 7, …

$ knewmean_b <int> 7, 7, 4, NA, NA, NA, NA, NA, 6, 7, NA, 3, NA, NA…

$ understood_p <int> NA, NA, NA, 5, 7, 7, 6, 7, NA, NA, 3, NA, 2, 7, …

$ understood_b <int> 7, 7, 4, NA, NA, NA, NA, NA, 6, 7, NA, 5, NA, NA…

$ close_p <int> NA, NA, NA, 5, 6, 7, 5, 7, NA, NA, 3, NA, 2, 7, …

$ connect_p <int> NA, NA, NA, 5, 6, 7, 5, 7, NA, NA, 3, NA, 2, 7, …

$ trust_p <int> NA, NA, NA, 6, 5, 7, 6, 7, NA, NA, 3, NA, 4, 7, …

$ close_b <int> 6, 7, 3, NA, NA, NA, NA, NA, 6, 7, NA, 4, NA, NA…

$ connect_b <int> 6, 7, 3, NA, NA, NA, NA, NA, 6, 7, NA, 4, NA, NA…

$ trust_b <int> 6, 7, 3, NA, NA, NA, NA, NA, 6, 7, NA, 5, NA, NA…

$ lonely <int> 3, 1, 1, 3, 2, 4, 4, 1, 1, 6, 4, 4, 2, 1, 6, 1, …

$ connected <int> 3, 7, 4, 5, 6, 6, 3, 7, 6, 2, 4, 4, 3, 6, 2, 4, …

$ distressed <int> 3, 1, 1, 3, 1, 2, 3, 1, 1, 7, 1, 2, 1, 1, 6, 1, …

$ excited <int> 3, 4, 1, 2, 4, 3, 2, 3, 1, 1, 4, 2, 5, 5, 1, 4, …

$ upset <int> 4, 1, 1, 2, 1, 4, 2, 1, 1, 2, 1, 1, 1, 2, 2, 1, …

$ guilty <int> 5, 1, 1, 2, 1, 1, 3, 1, 1, 7, 1, 1, 1, 5, 5, 1, …

$ scared <int> 4, 1, 1, 2, 1, 2, 5, 1, 1, 1, 2, 1, 1, 2, 5, 1, …

$ enthusiastic <int> 4, 5, 1, 3, 4, 6, 2, 5, 1, 2, 4, 3, 5, 5, 4, 5, …

$ ashamed <int> 4, 1, 1, 2, 1, 1, 1, 1, 1, 2, 1, 1, 1, 2, 2, 1, …

$ nervous <int> 4, 1, 1, 2, 1, 4, 6, 2, 1, 5, 1, 1, 1, 1, 1, 1, …

$ happy <int> 2, 5, 4, 4, 5, 4, 2, 5, 6, 4, 6, 4, 5, 6, 4, 7, …

$ sad <int> 4, 1, 1, 2, 1, 5, 3, 1, 1, 2, 2, 1, 1, 2, 6, 1, …

$ surprised <int> 2, 5, 1, 2, 4, 3, 1, 4, 5, 1, 1, 3, 1, 1, 1, 1, …

$ hopeful <int> 3, 7, 4, 4, 6, 6, 5, 5, 5, 4, 6, 5, 4, 6, 4, 6, …

$ optimistic <int> 5, 7, 4, 5, 5, 7, 6, 5, 6, 4, 6, 4, 5, 6, 5, 6, …

$ ambivalent <int> 5, 2, 4, 2, 4, 3, 1, 4, 1, 5, 1, 1, 1, 5, 1, 1, …

$ uneasy <int> 1, 1, 1, 2, 1, 4, 2, 1, 1, 6, 1, 3, 1, 1, 2, 4, …

$ unnerved <int> 1, 1, 1, 4, 4, 2, 1, 1, 1, 5, 2, 1, 1, 1, 2, 1, …

$ creeped <int> 1, 1, 3, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

$ uncomfortable <int> 4, 1, 1, 2, 1, 3, 4, 1, 1, 6, 1, 5, 1, 1, 1, 1, …

$ bothered <int> 6, 1, 1, 2, 1, 4, 4, 1, 1, 5, 1, 2, 1, 5, 1, 1, …

$ loneliness1 <int> 4, 2, 3, 4, 4, 5, 4, 1, 2, 6, 2, 5, 4, 4, 6, 1, …

$ loneliness2 <int> 3, 4, 3, 3, 4, 5, 4, 1, 2, 4, 5, 4, 2, 5, 4, 1, …

$ loneliness3 <int> 3, 4, 3, 4, 4, 5, 4, 1, 2, 5, 5, 5, 2, 4, 5, 1, …

$ convey.thoughts <int> 6, 5, 4, 2, 5, 5, 6, 3, 5, 7, 4, 5, 1, 5, 2, 5, …

$ have.exp <int> 5, 6, 3, 2, 6, 5, 4, 4, 5, 6, 3, 5, 3, 5, 5, 4, …

$ longing.or.hoping <int> 5, 1, 2, 1, 4, 2, 5, 4, 1, 7, 2, 2, 1, 7, 2, 1, …

$ exp.embrssment <int> 4, 1, 2, 1, 4, 3, 2, 2, 1, 5, 1, 2, 1, 5, 2, 1, …

$ understand.feeling <int> 6, 1, 3, 3, 6, 5, 6, 4, 5, 7, 3, 5, 1, 5, 5, 6, …

$ feel.afraid <int> 4, 1, 2, 1, 5, 2, 4, 2, 1, 4, 1, 2, 1, 3, 2, 1, …

$ feel.hungry <int> 1, 1, 2, 1, 2, 3, 1, 1, 1, 4, 1, 1, 1, 1, 2, 1, …

$ exp.joy <int> 5, 2, 2, 2, 4, 5, 2, 4, 1, 4, 1, 2, 1, 5, 5, 4, …

$ remember <int> 7, 7, 5, 5, 6, 5, 4, 5, 6, 7, 6, 7, 7, 6, 6, 6, …

$ tell.right.from.wrong <int> 6, 1, 3, 4, 6, 5, 4, 3, 5, 7, 2, 5, 2, 6, 3, 6, …

$ exp.pain <int> 2, 1, 2, 1, 4, 2, 1, 2, 1, 6, 2, 2, 1, 2, 5, 1, …

$ personality <int> 5, 6, 2, 2, 5, 3, 4, 5, 1, 7, 3, 4, 2, 5, 3, 7, …

$ make.plans <int> 5, 2, 4, 4, 4, 5, 5, 3, 2, 6, 6, 6, 4, 6, 6, 4, …

$ exp.pleasure <int> 2, 1, 2, 1, 4, 2, 1, 4, 1, 7, 3, 2, 1, 1, 2, 4, …

$ exp.pride <int> 5, 1, 2, 2, 5, 2, 3, 4, 1, 4, 1, 5, 1, 3, 3, 6, …

$ exp.anger <int> 2, 1, 2, 1, 4, 3, 3, 2, 1, 1, 1, 3, 1, 6, 2, 1, …

$ self.restraint <int> 4, 1, 3, 2, 4, 5, 6, 4, 1, 5, 6, 3, 1, 3, 5, 4, …

$ think <int> 7, 7, 4, 4, 6, 5, 6, 3, 6, 7, 6, 7, 1, 7, 7, 6, …

$ familiar_bing <int> 3, 4, 1, 4, 4, 1, 2, 3, 4, 1, 1, 4, 1, 2, 4, 7, …

$ familiar_gpt <int> 3, 7, 3, 6, 5, 2, 6, 6, 6, 4, 7, 4, 2, 6, 5, 4, …

$ familiar_bard <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 5, 2, 1, …

$ often_bing <int> 1, 1, 1, 1, 1, 1, 1, 2, 1, 1, 1, 4, 1, 1, 1, 4, …

$ often_gpt <int> 3, 6, 3, 3, 2, 1, 4, 5, 2, 2, 7, 4, 1, 6, 3, 1, …

$ often_bard <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 5, 1, 1, …

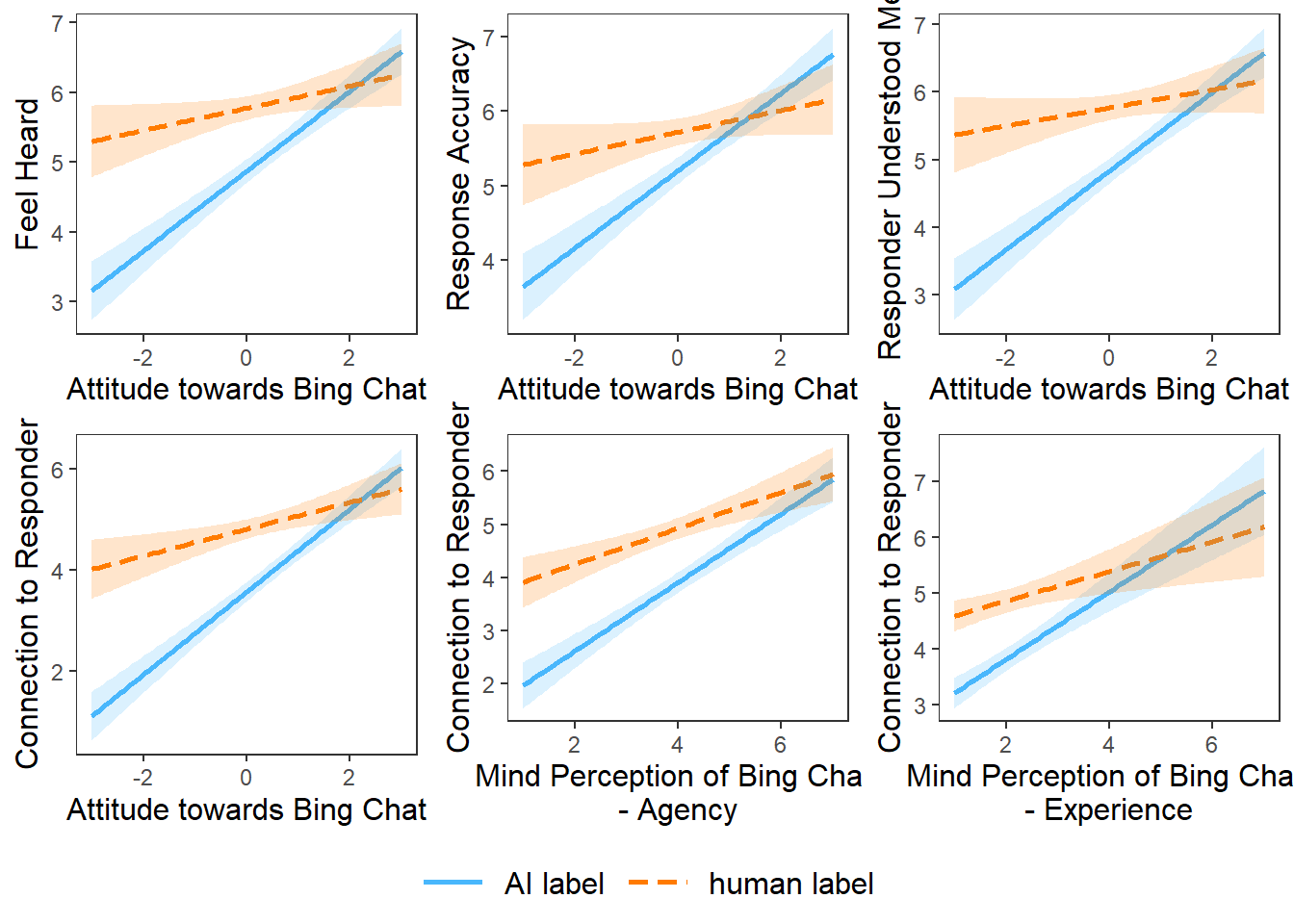

$ atti_bing <int> 3, 3, 0, 0, 0, 1, 0, 0, 2, 0, 0, 1, -3, 0, -1, 2…

$ atti_gpt <int> 2, 3, 0, 1, 0, 1, 0, 2, 2, 1, 3, 2, -3, 2, 0, 2,…

$ atti_bard <int> 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, -3, 1, 0, 0,…

$ loneliness <dbl> 3.333333, 3.333333, 3.000000, 3.666667, 4.000000…

$ responseR <chr> "ai response", "ai response", "ai response", "ai…

$ labelR <chr> "ai label", "ai label", "ai label", "human label…

$ empathicaccuracy.ai <dbl> 0.8333333, 1.3333333, 1.5000000, 1.0000000, 1.50…

$ empathicaccuracy.r <dbl> NA, NA, NA, NA, NA, NA, NA, 1.0000000, NA, 0.833…

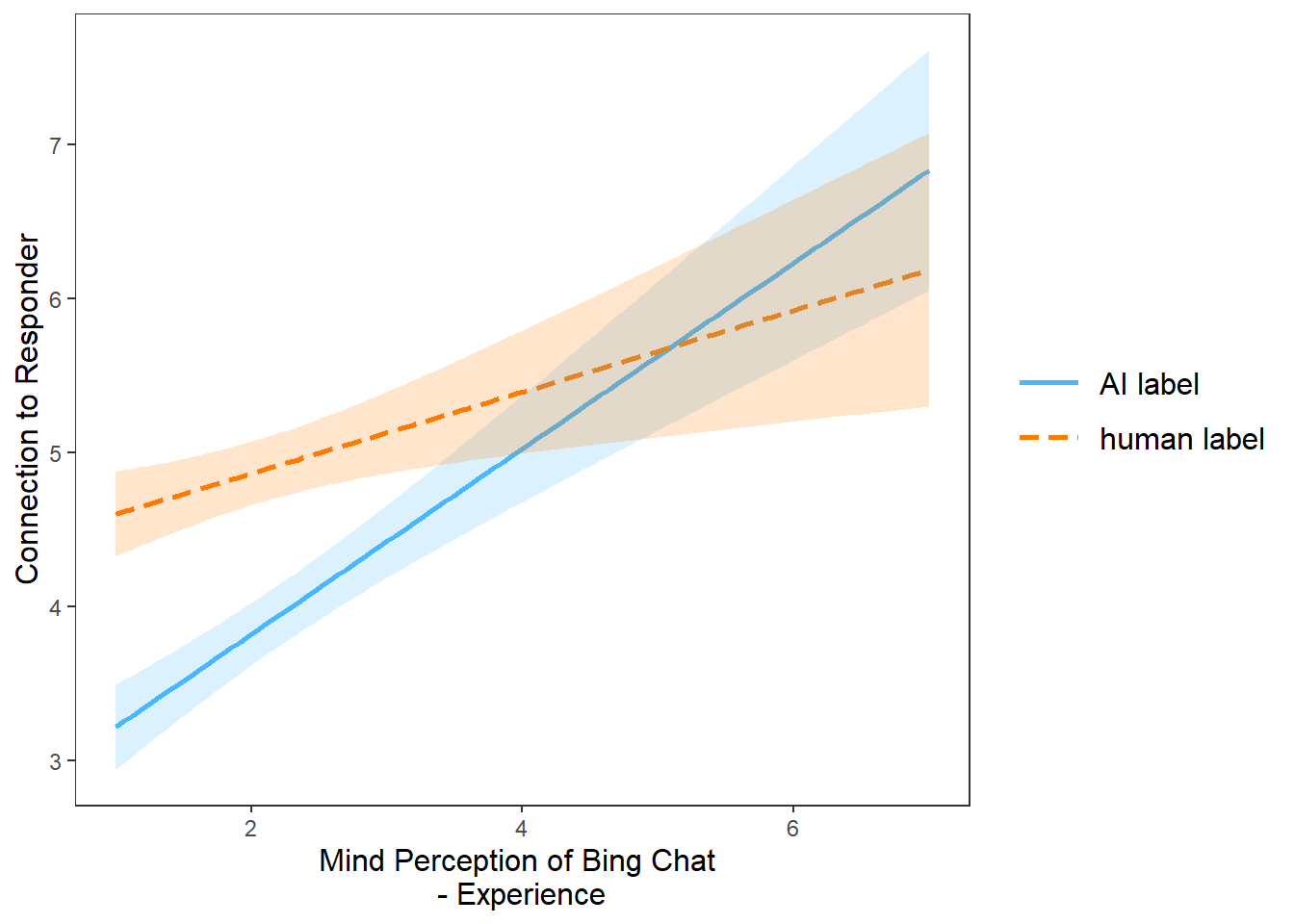

$ experience <dbl> 3.636364, 2.000000, 2.090909, 1.363636, 4.272727…

$ agency <dbl> 5.857143, 3.428571, 3.714286, 3.428571, 5.285714…

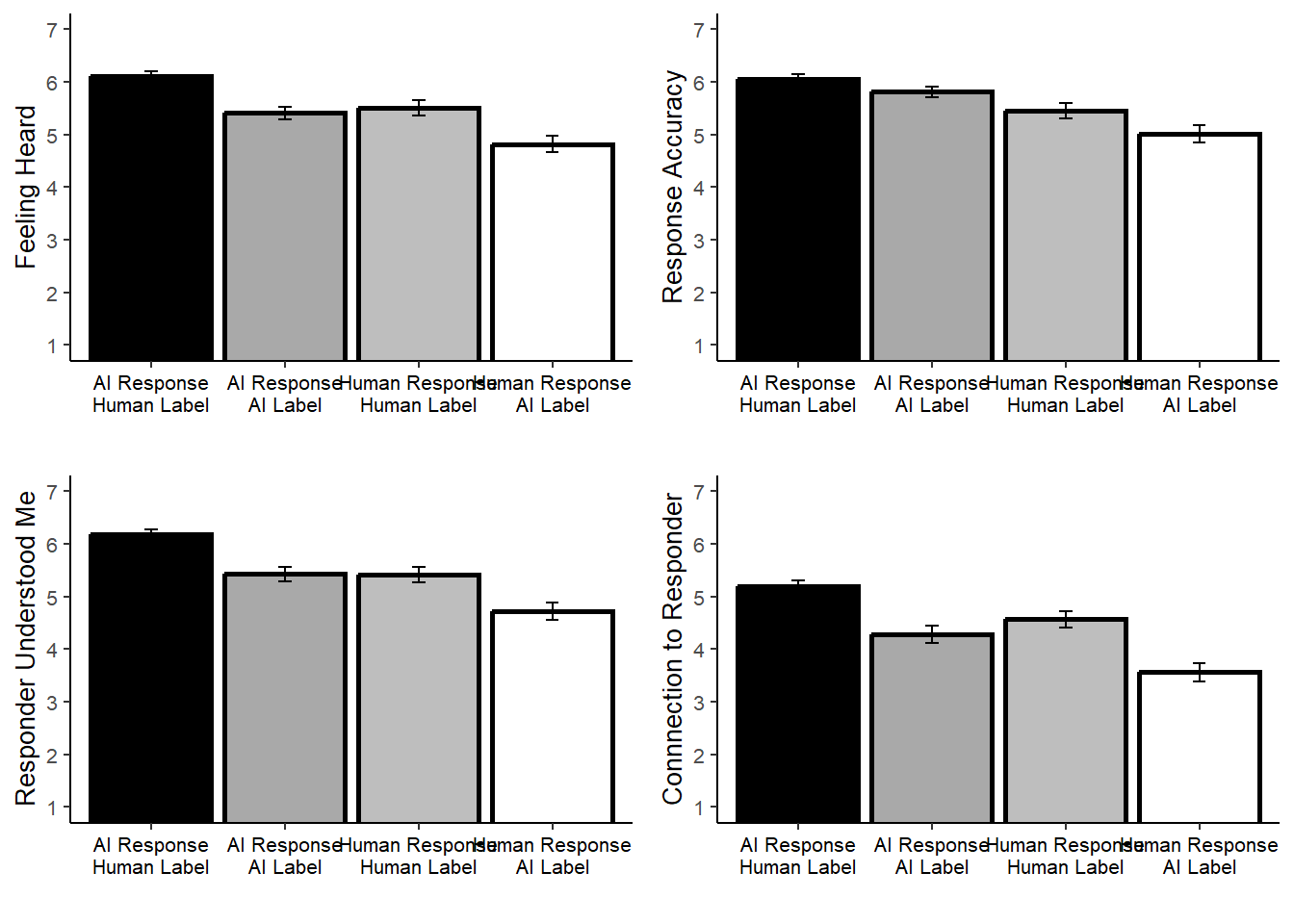

$ feelheard <dbl> 5.666667, 7.000000, 3.833333, 5.666667, 7.000000…

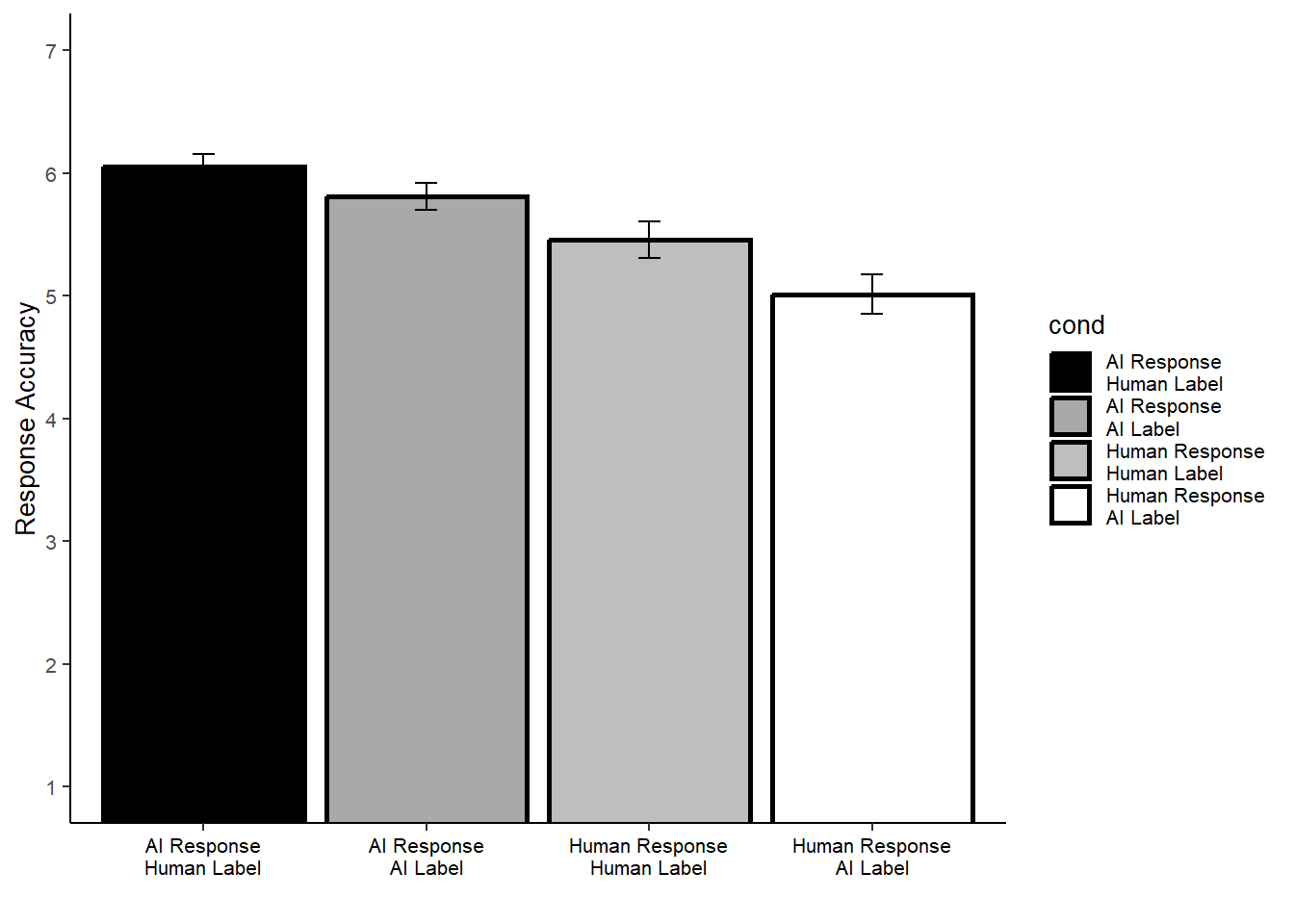

$ accuracy <dbl> 6.0, 7.0, 4.5, 5.0, 7.0, 4.0, 6.0, 6.5, 6.0, 7.0…

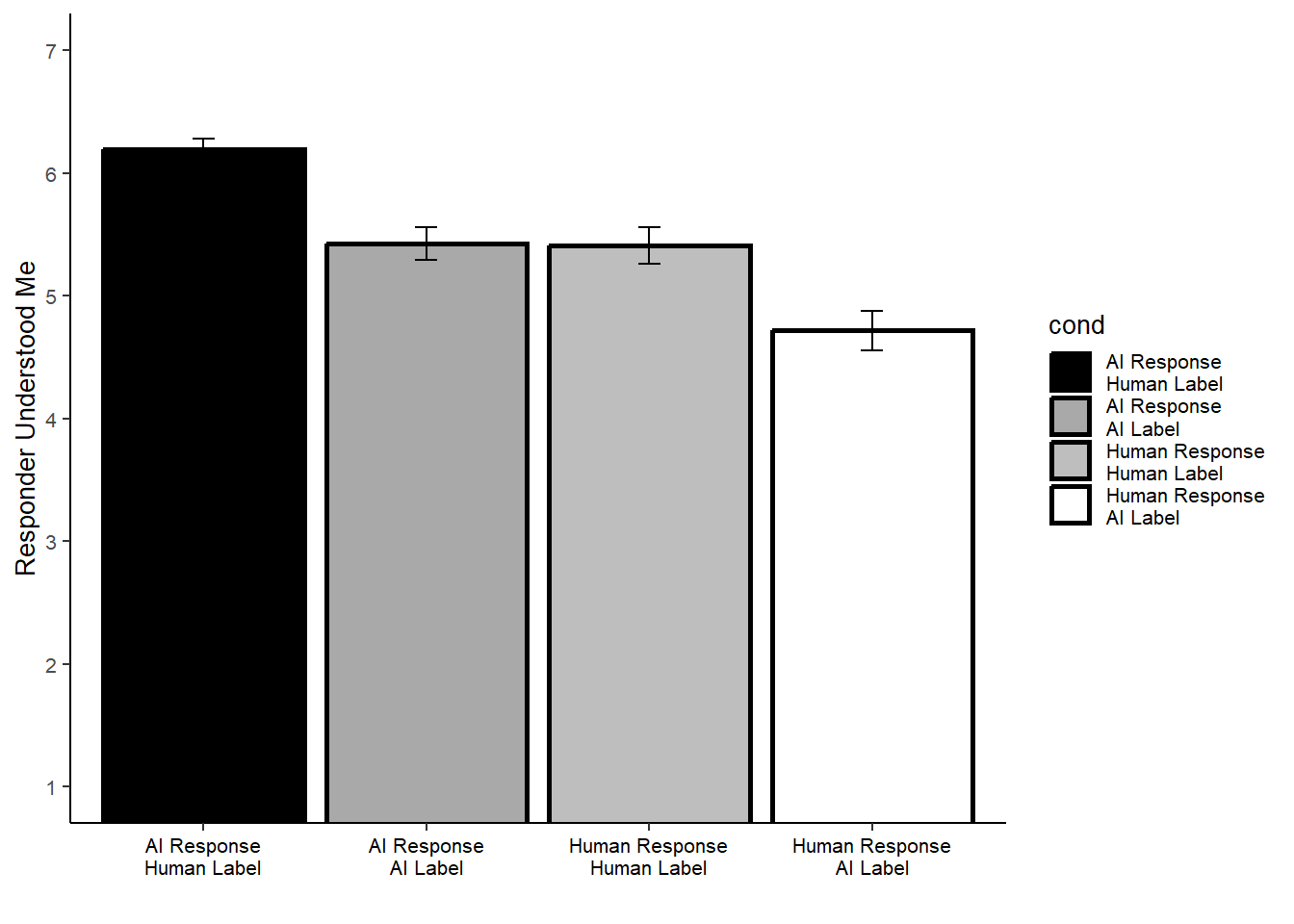

$ understoodme <dbl> 7.0, 7.0, 4.0, 5.5, 7.0, 7.0, 5.5, 7.0, 6.0, 7.0…

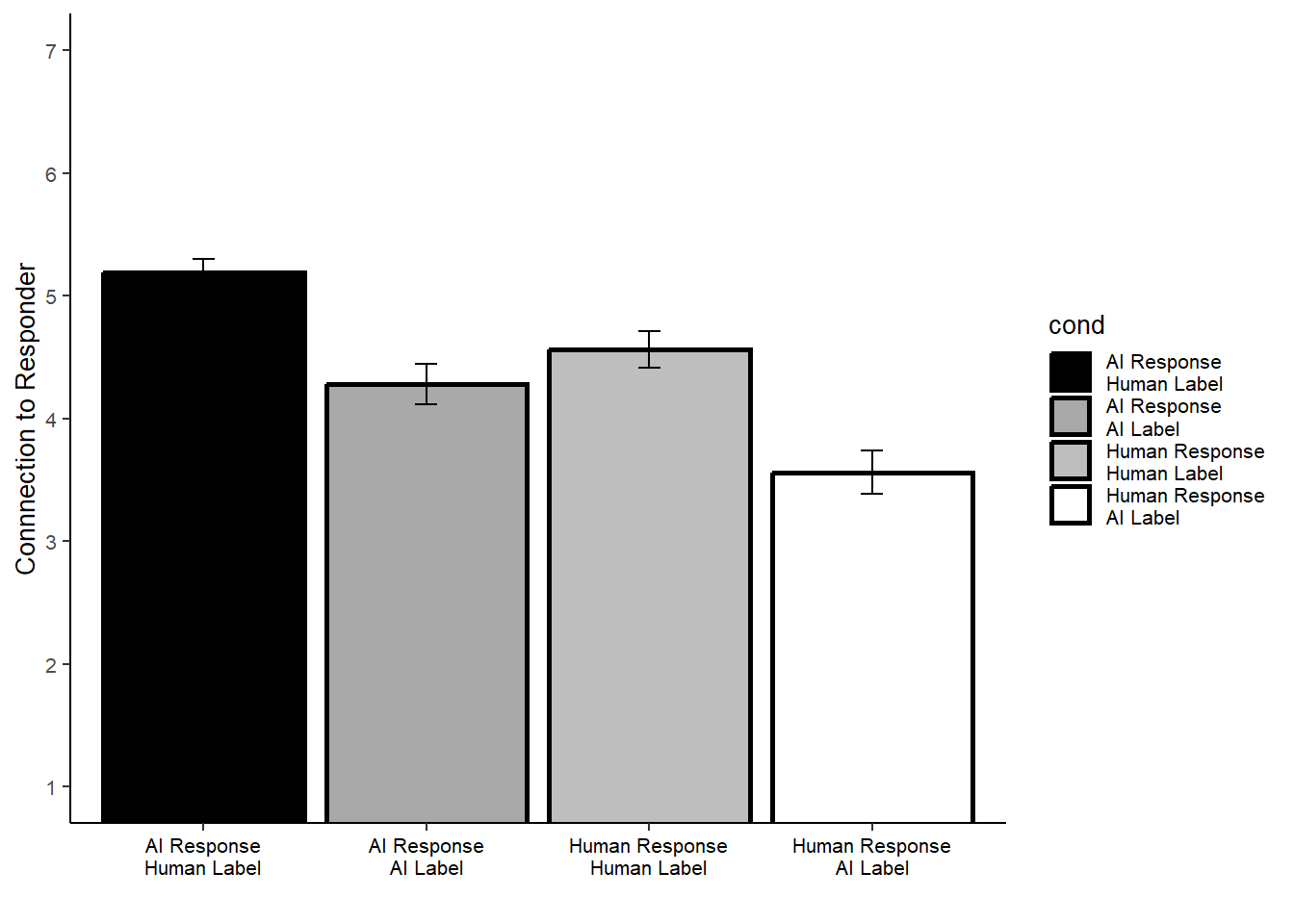

$ connection <dbl> 6.000000, 7.000000, 3.000000, 5.333333, 5.666667…

$ statelonely <dbl> 3.0, 4.0, 2.5, 4.0, 4.0, 5.0, 3.5, 4.0, 3.5, 4.0…

$ excitement <dbl> 3.5, 4.5, 1.0, 2.5, 4.0, 4.5, 2.0, 4.0, 1.0, 1.5…

$ hope <dbl> 4.0, 7.0, 4.0, 4.5, 5.5, 6.5, 5.5, 5.0, 5.5, 4.0…

$ fear <dbl> 4.0, 1.0, 1.0, 2.0, 1.0, 3.0, 5.5, 1.5, 1.0, 3.0…

$ discomfort <dbl> 2.000000, 1.000000, 1.000000, 2.666667, 2.000000…

$ distress <dbl> 4.25, 1.00, 1.00, 2.25, 1.00, 3.75, 3.00, 1.00, …

$ shame <dbl> 4.5, 1.0, 1.0, 2.0, 1.0, 1.0, 2.0, 1.0, 1.0, 4.5…
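
The listing does not say how the composite `<dbl>` columns were built. For the rows visible above, `loneliness` and `accuracy` are consistent with unweighted row means of `loneliness1`-`loneliness3` and of `accuracy1`-`accuracy2`. The following is a minimal base R sketch of that check, assuming row means are the intended scoring. The first three rows are copied by hand from the listing, and the `*_check` column names are hypothetical.

```r
# First three rows only, with values copied from the glimpse() listing above.
d <- data.frame(
  loneliness1 = c(4, 2, 3),
  loneliness2 = c(3, 4, 3),
  loneliness3 = c(3, 4, 3),
  accuracy1   = c(6, 7, 4),
  accuracy2   = c(6, 7, 5)
)

# Assumption: each composite is the unweighted row mean of its items.
# The *_check names are hypothetical and not part of the original data.
d$loneliness_check <- rowMeans(d[, c("loneliness1", "loneliness2", "loneliness3")])
d$accuracy_check   <- rowMeans(d[, c("accuracy1", "accuracy2")])

# Compare these against the loneliness and accuracy columns in the listing.
print(d[, c("loneliness_check", "accuracy_check")])
```

On the full data, the same comparison could be run with `all.equal()` between each recomputed column and its stored counterpart. A mismatch would suggest different scoring, such as reverse-coded items or a different treatment of missing values.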